I have long dreamed of attending an art exhibition that presented the full range of generative art starting with early analog works of the late 1950s and ranging all the way up to new AI work we have seen in just the last few years. To my knowledge, no such show has ever existed. Just to attend such a show would be a dream come true for me.

So when the Kate Vass Galerie proposed that I co-curate a show on the history of generative art, I thought I had died and gone to heaven. While I love early generative art, especially artists like Vera Molnar and Frieder Nake, my passion is really centered on contemporary generative art. So pairing up with my good friend Georg Bak, an expert in early generative photography, was the perfect match. Georg brings an unmatched passion for and detailed understanding of early generative art that firmly plants this show in a deep and rich tradition that many have yet to learn about.

As my wife can attest, I have regularly been waking up at four in the morning and going to bed past midnight as we race to put together this historically significant show, unprecedented in its scope.

I couldn’t be more enthusiastic about and proud of the show we are putting together, and I am excited to share the official press release with you below:

Invitation for Automat und Mensch (Man and Machine)

“This may sound paradoxical, but the machine, which is thought to be cold and inhuman, can help to realize what is most subjective, unattainable, and profound in a human being.” - Vera Molnar

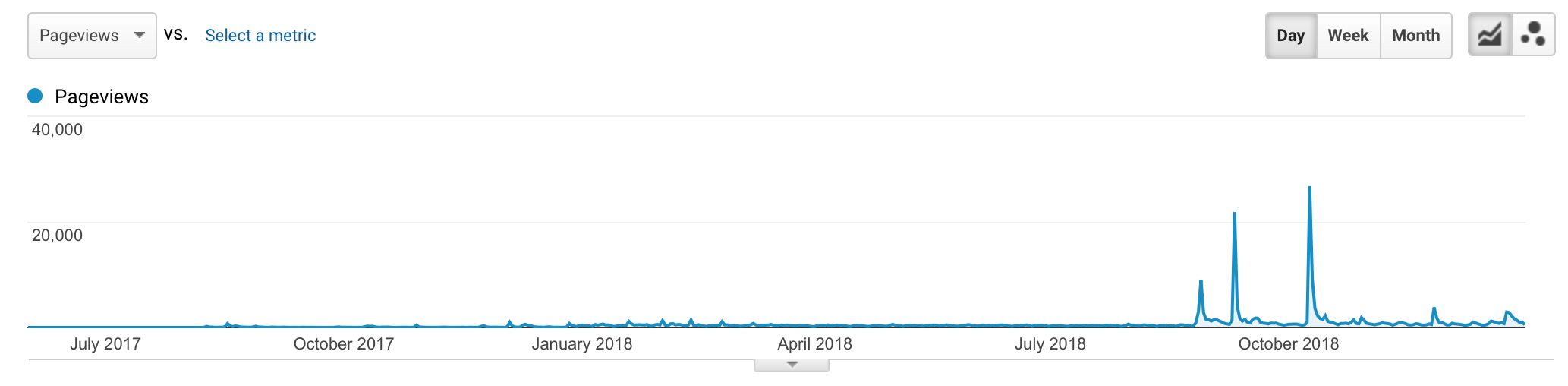

In the last twelve months we have seen a tremendous spike in interest in “AI art,” ushered in by Christie’s and Sotheby’s both offering at auction works developed with machine learning. Capturing the imaginations of collectors and the general public alike, the new work has some conservative members of the art world scratching their heads and suggesting it is merely another passing fad. What they are missing is that this rich genre, more broadly referred to as “generative art,” has a history as long and fascinating as computing itself: a history that has largely been overlooked in the recent mania for “AI art,” and one that co-curators Georg Bak and Jason Bailey hope to shine a bright light on in their upcoming show Automat und Mensch (Man and Machine) at Kate Vass Galerie in Zurich, Switzerland.

Generative art, once perceived as the domain of a small number of “computer nerds,” is now the art form best poised to capture what sets our generation apart from those that came before us: ubiquitous computing. For those of us who grew up as children of the digital revolution, computing has become our greatest shared experience. Like it or not, we are all now computer nerds, inseparable from the many devices through which we mediate our worlds.

Though slow to gain traction in the traditional art world, generative art produces elegant and compelling works that extend the very same principles and goals that analog artists have pursued from the inception of modern art. Geometry, abstraction, and chance are important themes not just for generative art, but for much of the important art of the 20th century.

Every generation claims art is dead, asking, “Where are our Michelangelos? Where are our Picassos?” only to have later generations point out that the geniuses were among us the whole time. With generative art we have the unique opportunity to celebrate the early masters while they are still here to experience it.

9 Analogue Graphics, Herbert W. Franke, 1956/’57

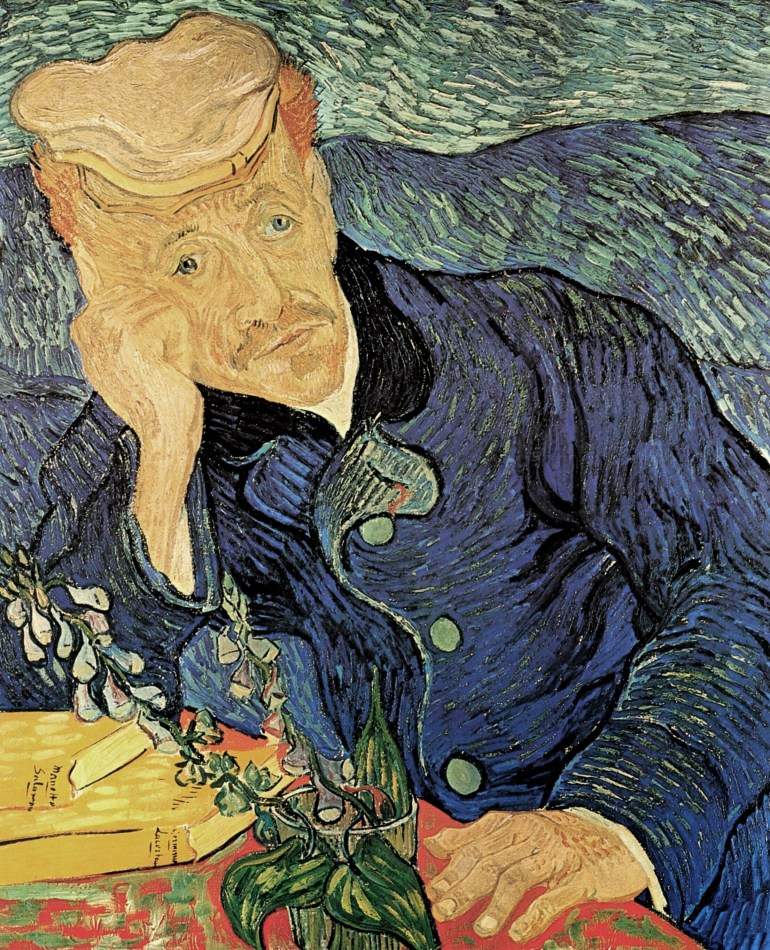

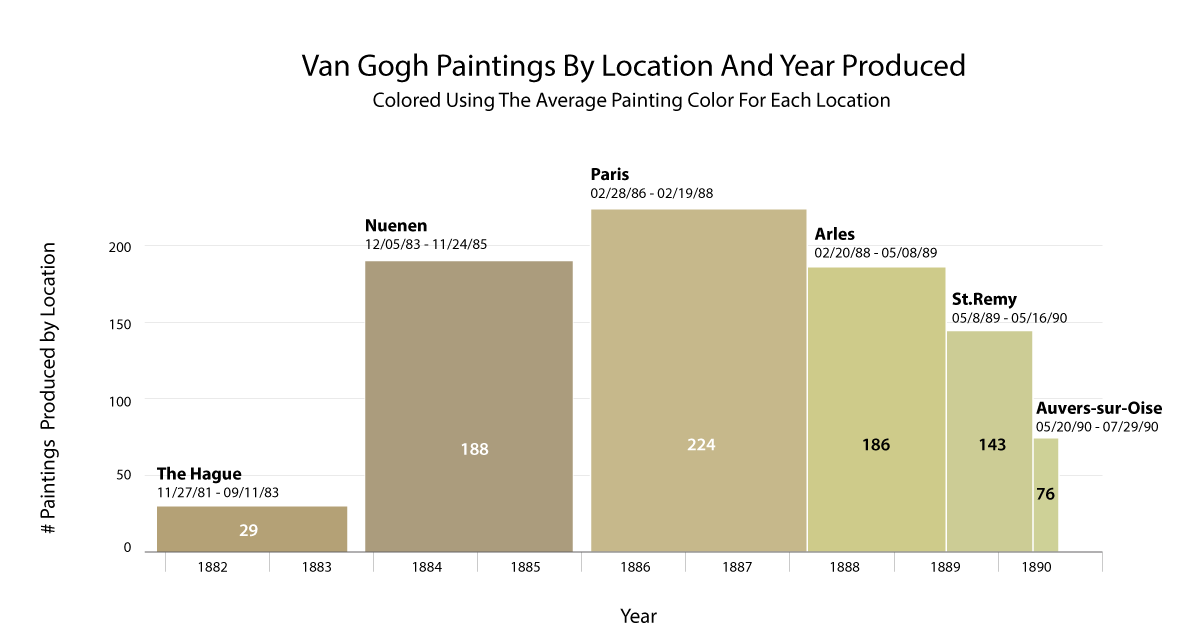

The Automat und Mensch (Man and Machine) exhibition is, above all, an opportunity to put important work by generative artists spanning more than six decades into context by showing it in a single location. By juxtaposing important works like the 1956/’57 oscillograms by Herbert W. Franke (age 91) with the 2018 AI Generated Nude Portrait #1 by contemporary artist Robbie Barrat (age 19), we can see the full history and spectrum of generative art as it has never been shown before.

Correction of Rubens: Saturn Devouring His Son, Robbie Barrat, 2019

Emphasizing the deep historical roots of AI and generative art, the show takes its title from the 1961 book of the same name by German computer scientist and media theorist Karl Steinbuch. The book contains important early writings on machine learning and was inspirational for early generative artists like Gottfried Jäger.

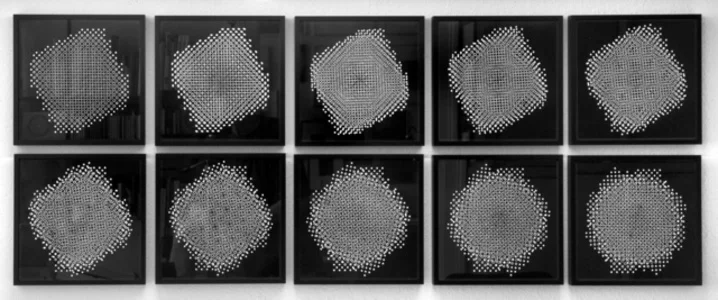

We will be including in the exhibition a set of 10 pinhole structures created by Jäger with a self-made pinhole camera obscura. Jäger, generally considered the father and founder of “generative photography,” was also the first to use the term “generative aesthetics” within the context of art history.

10 Pinhole Structures, Gottfried Jäger, 1967/’94

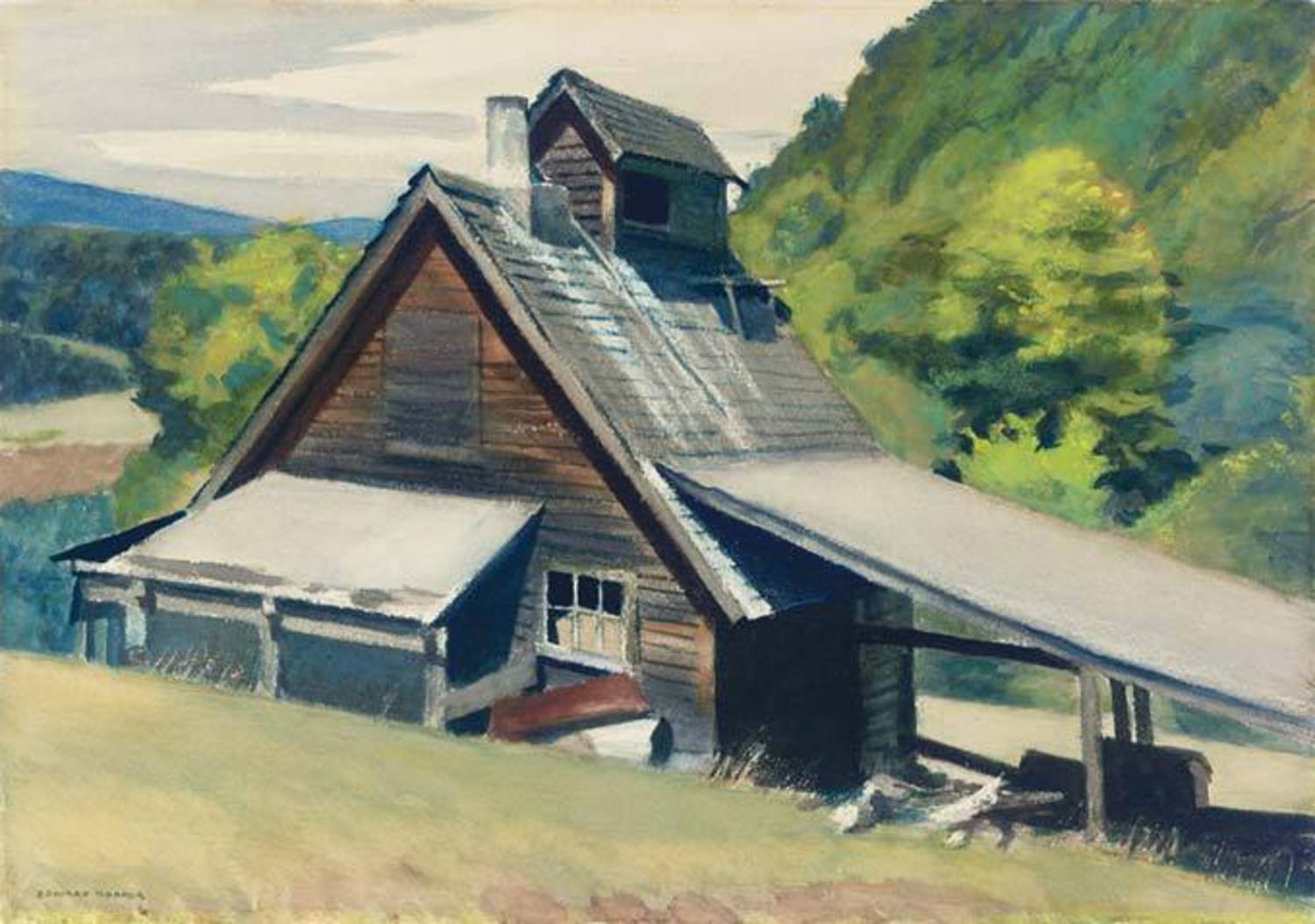

We will also be presenting some early machine-made drawings by the British artist Desmond Paul Henry, considered to be the first artist to have an exhibition of computer-generated art. In 1961 Henry won first place in a contest sponsored in part by the well-known British artist L.S. Lowry. The prize was a one-man show at The Reid Gallery in August 1962, which Henry titled Ideographs. In the show, Henry included drawings produced by his first drawing machine, which he built in 1961 by adapting a wartime bombsight computer.

Untitled, Desmond Paul Henry, early 1960s

The show features other important works from the 1960s through the 1980s by pioneering artists like Vera Molnar, Nicolas Schoeffer, Frieder Nake, and Manfred Mohr.

We have several generative works from the early 1990s by John Maeda, former president of the prestigious Rhode Island School of Design (2008-2014) and associate director of research at MIT Media Lab. Though Maeda is an accomplished generative artist with works in major museums, his greatest contribution to generative art was perhaps his invention of “Design By Numbers,” a platform for artists and designers to explore programming.

Casey Reas, one of Maeda’s star pupils at the MIT Media Lab, will share several generative sketches dating back to the early days of Processing. Reas is the co-creator of the Processing programming language (inspired by Maeda’s “Design By Numbers”), which has done more to increase the awareness and proliferation of generative art than any other single contribution. Processing made generative art accessible to anyone in the world with a computer. You no longer needed expensive hardware, and more importantly, you did not need to be a computer scientist to program sketches and create generative art.

This ten-minute presentation introduces the Process works created by Casey Reas from 2004 to 2010.

Among the most accomplished artists to ever use Processing are Jared Tarbell and Manolo Gamboa Naon, who will both be represented in the exhibition. Tarbell mastered the earliest releases of Processing, producing works of unprecedented beauty. Tarbell’s work appears to have grown from the soil rather than from a computer and looks as fresh and cutting edge today as it did in 2003.

Substrate, Jared Tarbell, 2003

Argentinian artist Manolo Gamboa Naon, better known as “Manolo,” is a master of color, composition, and complexity. Highly prolific and exploratory, Manolo creates work that takes visual cues from a dizzying array of aesthetic material, from 20th-century art to modern-day pop culture. Though varied, his work is distinctive and immediately recognizable, and it consistently pushes the limits of what is possible in Processing.

aaaaa, Manolo Gamboa Naon, 2018

With the invention of new machine learning tools like DeepDream and GANs (generative adversarial networks), “AI art,” as it is commonly referred to, has become particularly popular in the last five years. One artist, Harold Cohen, explored AI and art for nearly 50 years before we saw the rising popularity of these new machine learning tools. In those five decades, Cohen worked on a single program called Aaron that involved teaching a robot to create drawings. Aaron’s education took a similar path to that of humans, evolving from simple pictographic shapes and symbols to more figurative imagery, and finally into full-color images. We will be including important drawings by Cohen and Aaron in the exhibition.

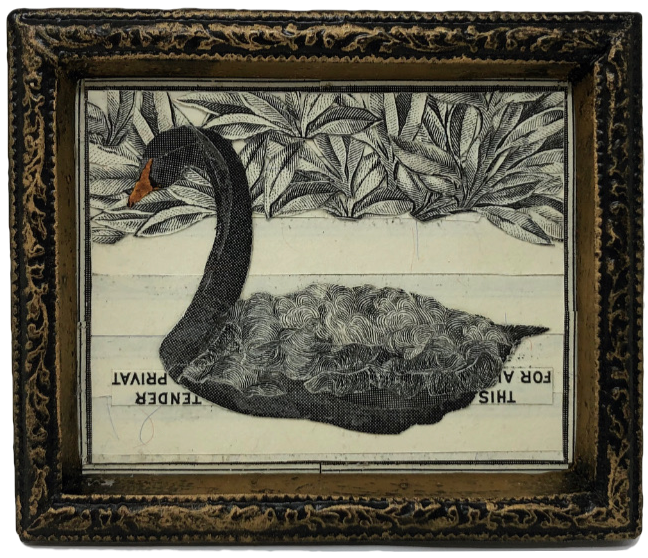

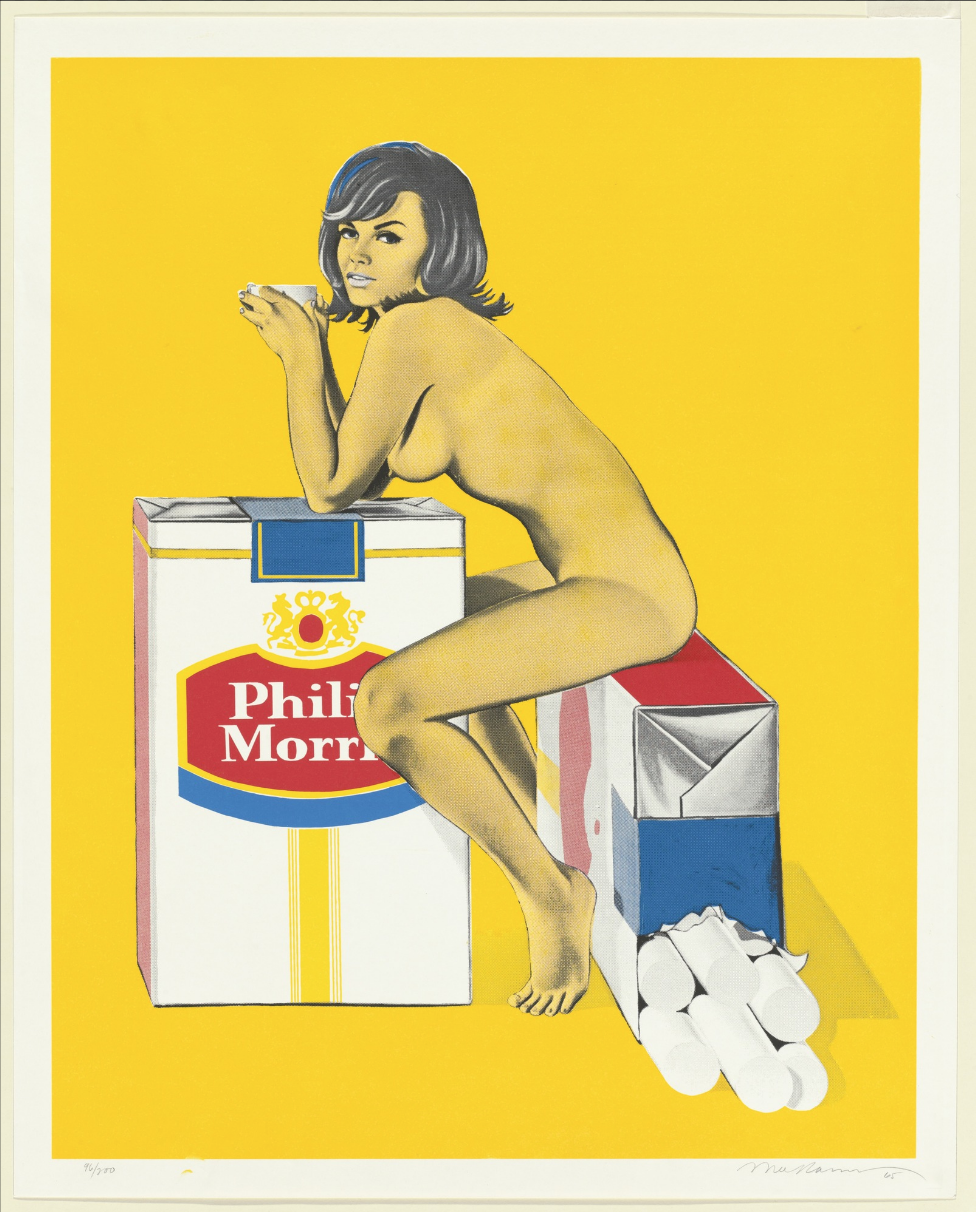

AI and machine learning have also added complexity to copyright, and in many ways the laws are still catching up. We saw this in 2018 when Christie’s sold a work by the French collective Obvious for $432k that was based heavily on work by artist Robbie Barrat. Pioneering cyberfeminist Cornelia Sollfrank explored issues around generative art and copyright back in 2004 when a gallery refused to show her Warhol Flowers. The flowers were created using Sollfrank’s “Net Art Generator,” but the gallery claimed the images were too close to Warhol’s “original” works to show. Sollfrank, who holds that “a smart artist makes the machine do the work,” believed she had a case that the images created by her program were sufficiently differentiated. She responded to the gallery by recording conversations with four separate copyright attorneys and playing the videos simultaneously. In doing so, Sollfrank raised legal and moral questions about machine authorship and copyright that we are still exploring today. We are excited to be including several of Sollfrank’s Warhol Flowers in the show.

Anonymous_warhol-flowers, Cornelia Sollfrank

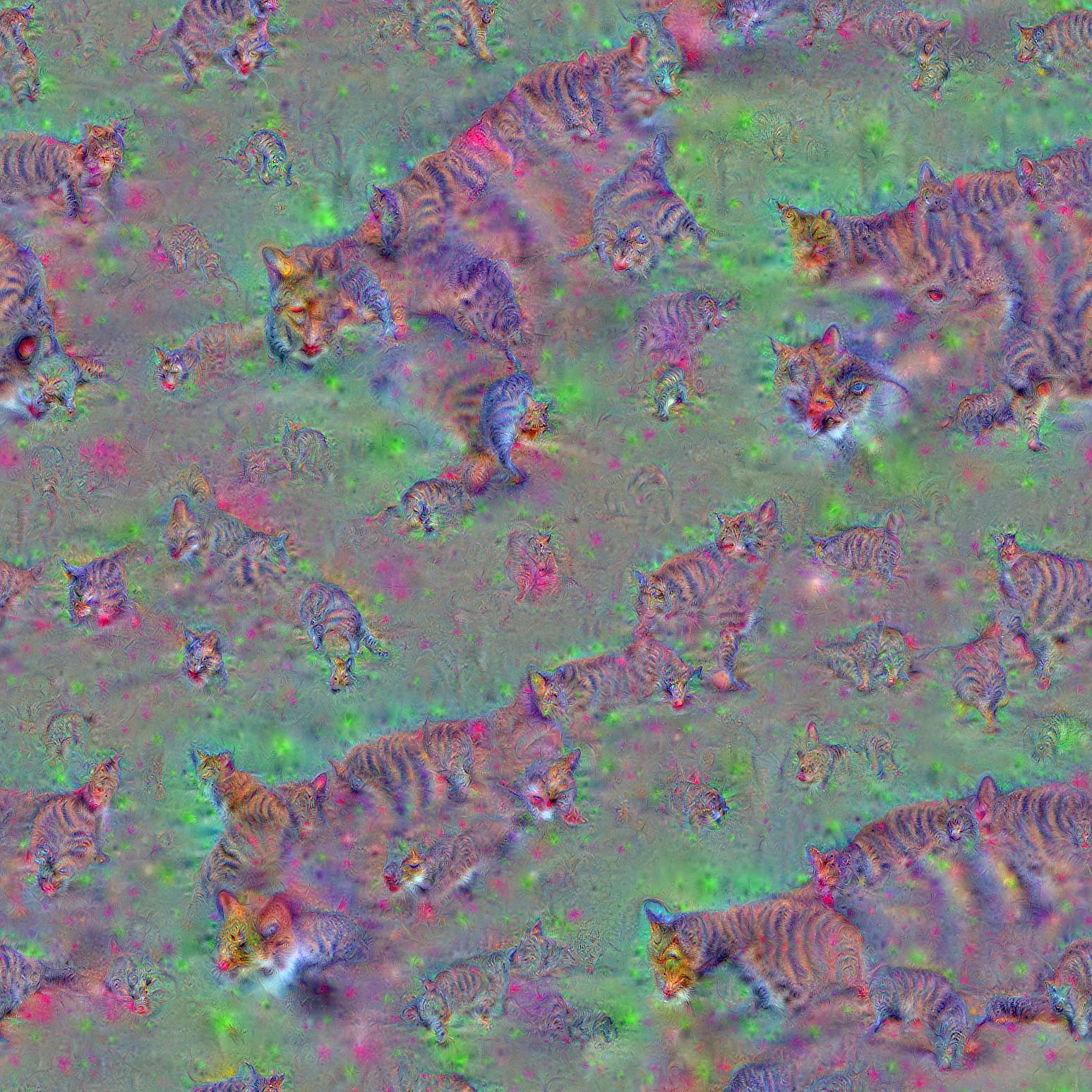

While we have gone to great lengths to focus on historical works, one of the show’s greatest strengths is the range of important works by contemporary AI artists. We start with one of the very first works by Google DeepDream inventor Alexander Mordvintsev, produced in May 2015. DeepDream soon took the world by storm with surreal, acid-trip-like imagery of cats and dogs growing out of people’s heads and bodies. Virtually all contemporary AI artists credit Mordvintsev’s DeepDream as a primary source of inspiration for their interest in machine learning and art. We are thrilled to be including one of the very first images produced by DeepDream in the exhibition.

Cats, Alexander Mordvintsev, 2015

The show also includes work by Tom White, Helena Sarin, David Young, Sofia Crespo, Memo Akten, Anna Ridler, Robbie Barrat, and Mario Klingemann.

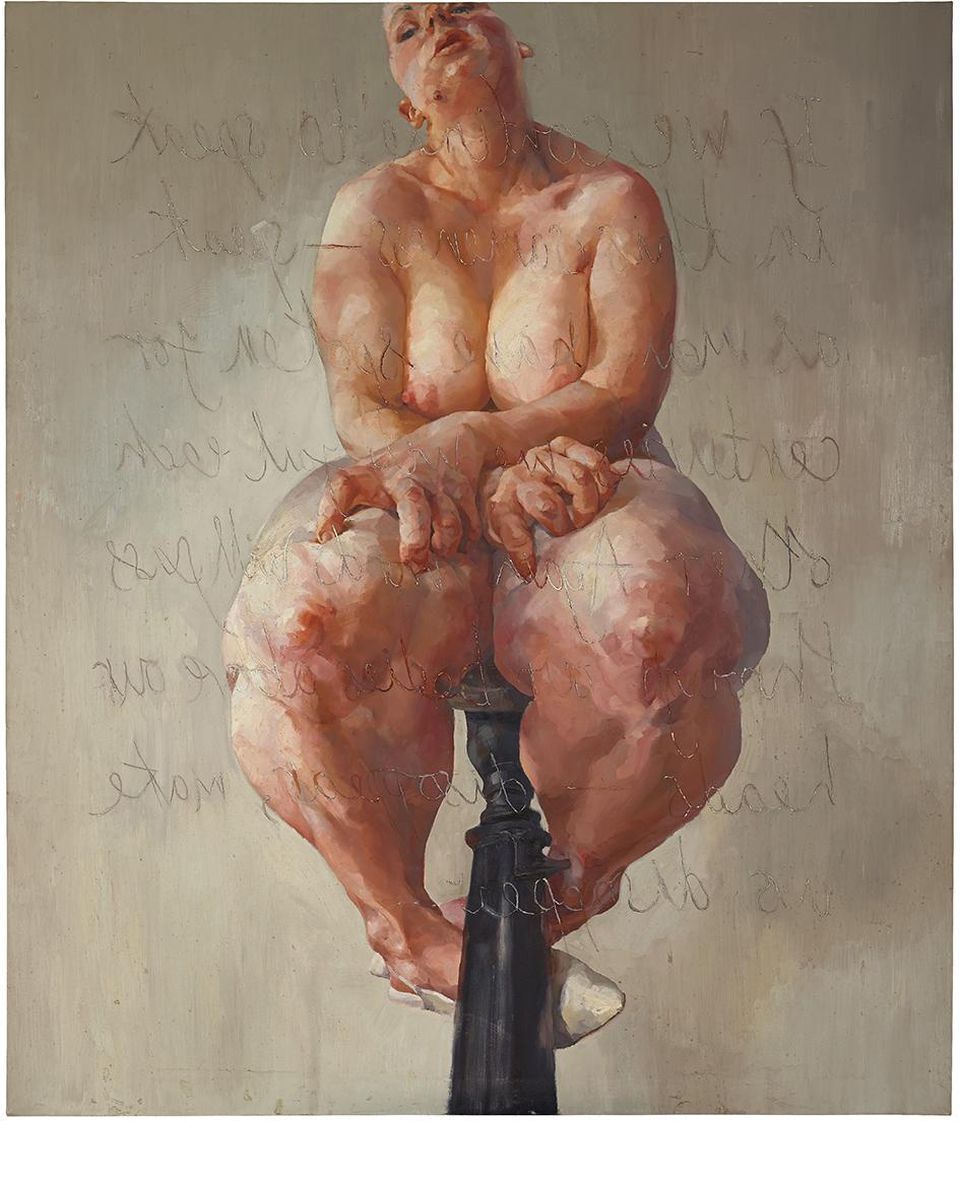

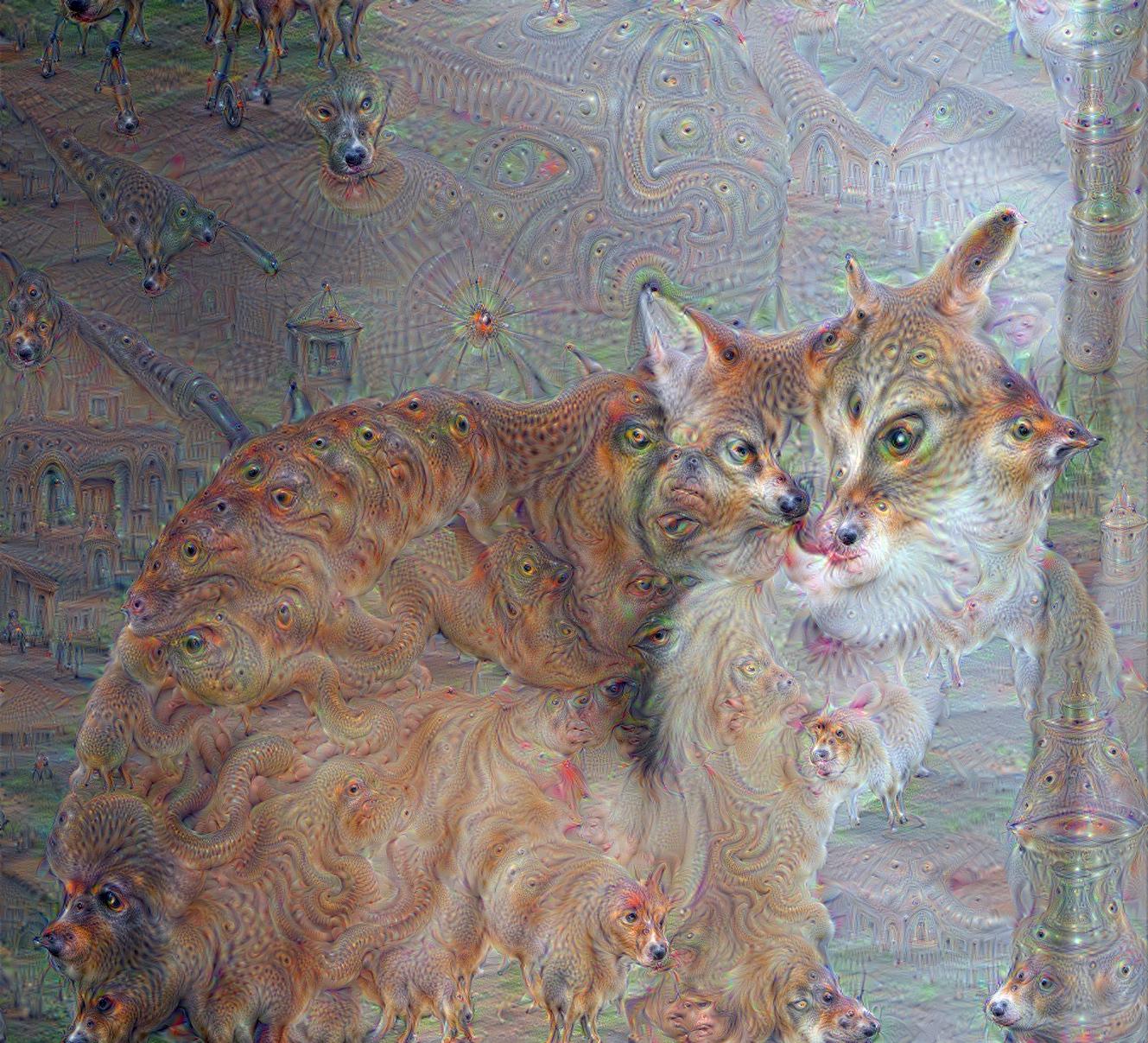

Klingemann will show his 2018 Lumen Prize-winning work The Butcher’s Son. The artwork is an arresting image that was created by training a chain of GANs to evolve a stick figure (provided as initial input) into a detailed and textured output. We are also excited to be showing Klingemann’s work 79543 self-portraits, which explores a feedback loop of chained GANs and is reminiscent of his Memories of Passersby which recently sold at Sotheby’s.

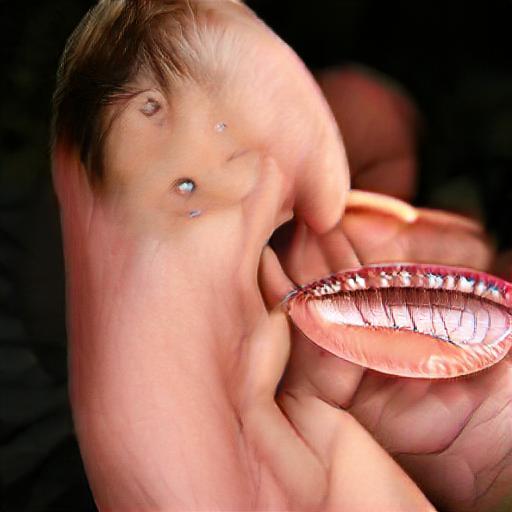

The Butcher’s Son, Mario Klingemann, 2018

Automat und Mensch takes place at the Kate Vass Galerie in Zürich, Switzerland, and will be accompanied by an educational program including lectures and panels from participating artists and thought leaders on AI art and generative art history. The show runs from May 29th to October 15th, 2019.

Participating Artists:

Herbert W. Franke

Gottfried Jäger

Desmond Paul Henry

Nicolas Schoeffer

Manfred Mohr

Vera Molnar

Frieder Nake

Harold Cohen

Gottfried Honegger

Cornelia Sollfrank

John Maeda

Casey Reas

Jared Tarbell

Memo Akten

Mario Klingemann

Manolo Gamboa Naon

Helena Sarin

David Young

Anna Ridler

Tom White

Sofia Crespo

Matt Hall & John Watkinson

Primavera de Filippi

Robbie Barrat

Harm van den Dorpel

Roman Verostko

Kevin Abosch

Georg Nees

Alexander Mordvintsev

Benjamin Heidersberger

For further info and images, please do not hesitate to contact us at: info@katevassgalerie.com