Artnome turns two this month and we are celebrating by announcing two exciting new partnerships and a brilliant new board of advisors to help us navigate the next two years! Both our partnerships and our advisory board align perfectly with our two primary goals of improving the art historical record and promoting artists working at the intersection of art and tech through interviews and articles.

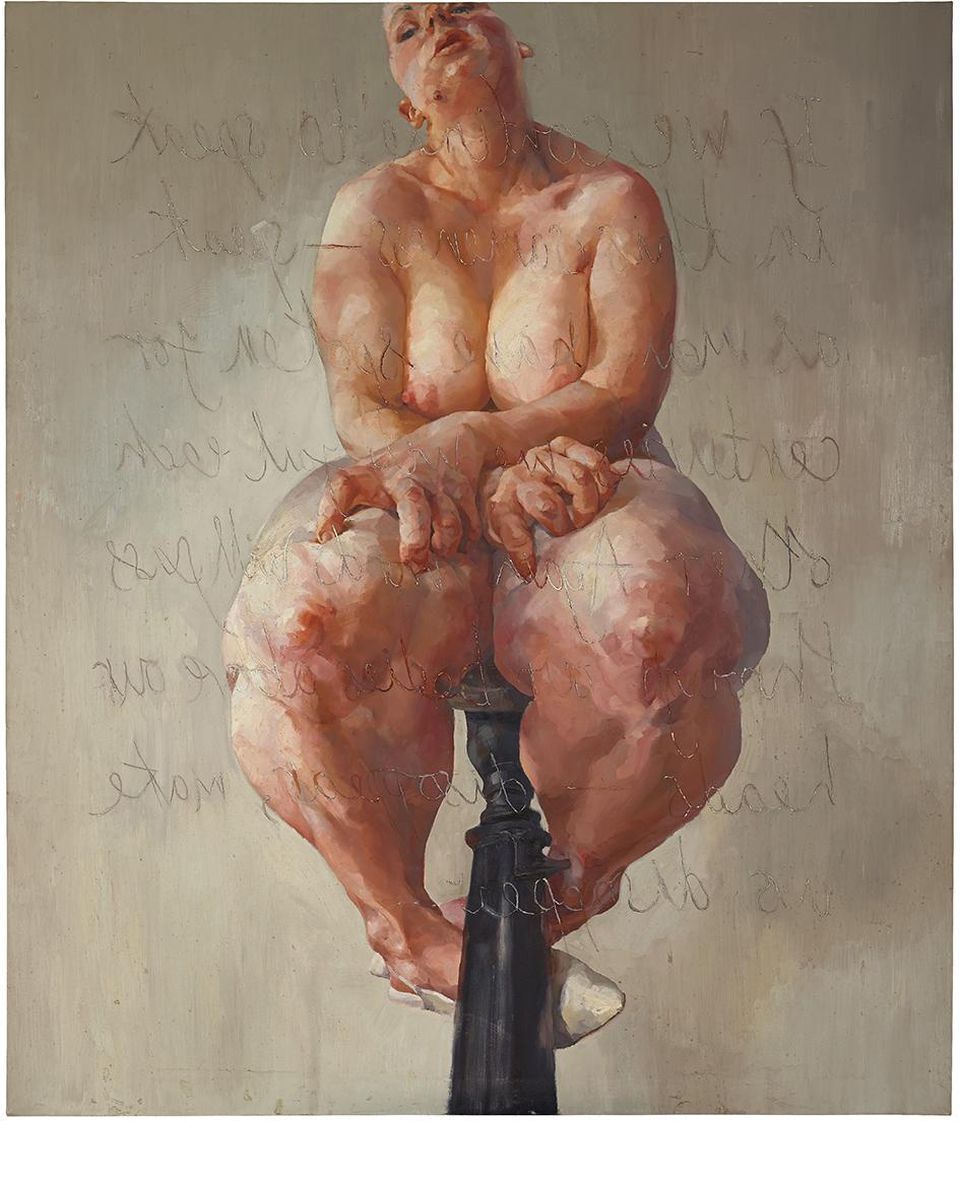

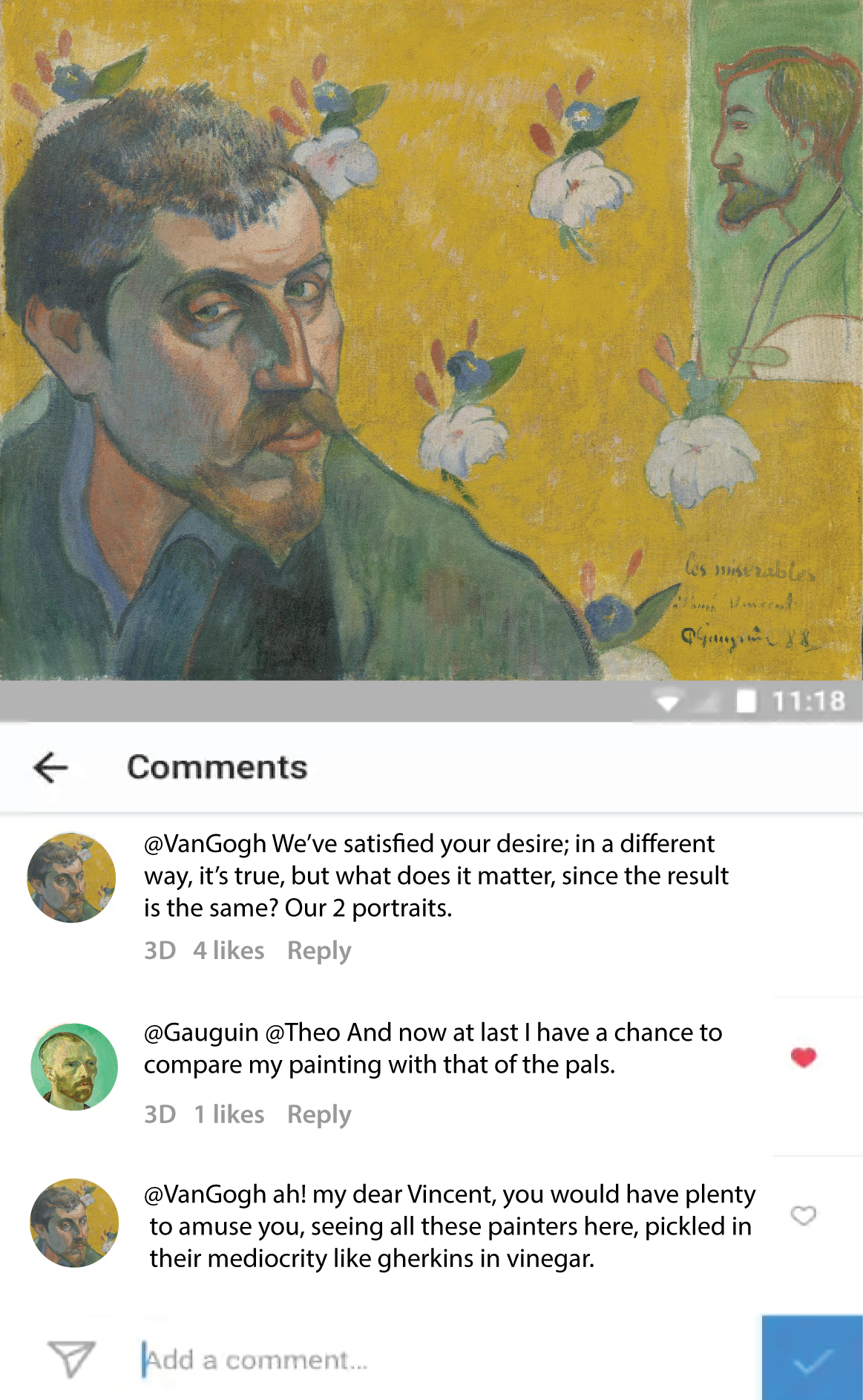

As many of you know, Artnome co-curated a show on the history of generative and AI art with Georg Bak called Automat und Mensch, which had a successful opening last month in Zurich.

From Left: Robbie Barrat, Herbert W. Franke, Mario Klingemann, Kate Vass, Jason Bailey, Georg Bak

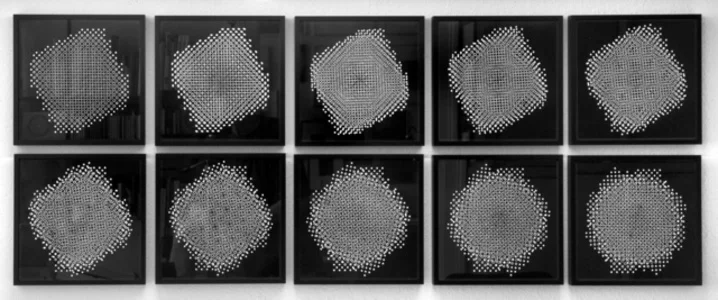

Working with Kate Vass and her team was a fantastic experience, and I am excited to announce our partnership, making Kate Vass Galerie the official gallery of Artnome! Kate’s most recent shows on blockchain art and generative art, respectively, line up perfectly with the topics and artists we cover and love.

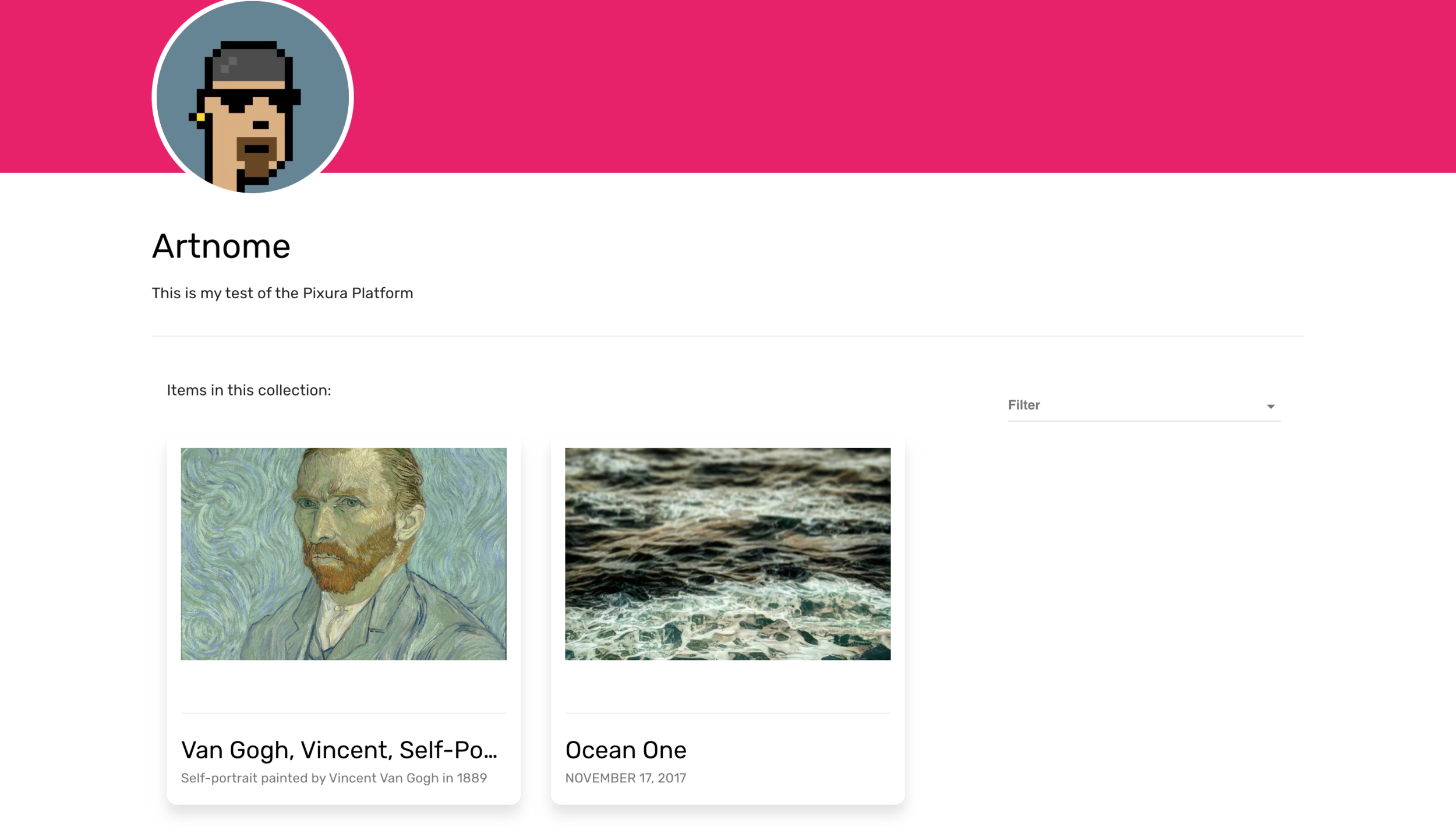

Under this new partnership, I will continue to curate for the Kate Vass Galerie, make introductions for more up-and-coming artists, and link to and advertise the Kate Vass Galerie as the official Artnome gallery. In keeping with both of our missions and goals, we are looking at adding a blockchain-based gallery to make collecting digital art accessible to the next generation of collectors.

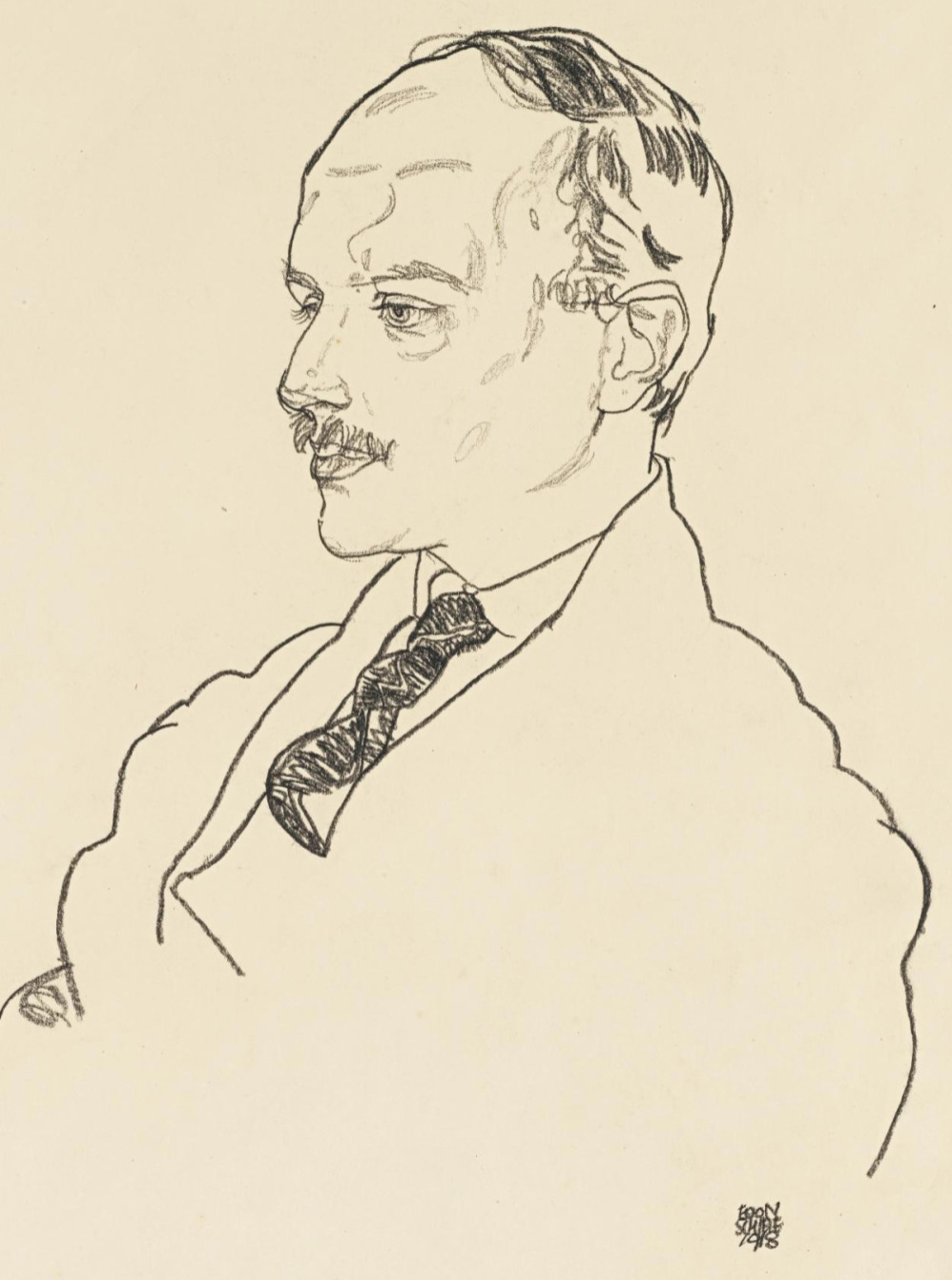

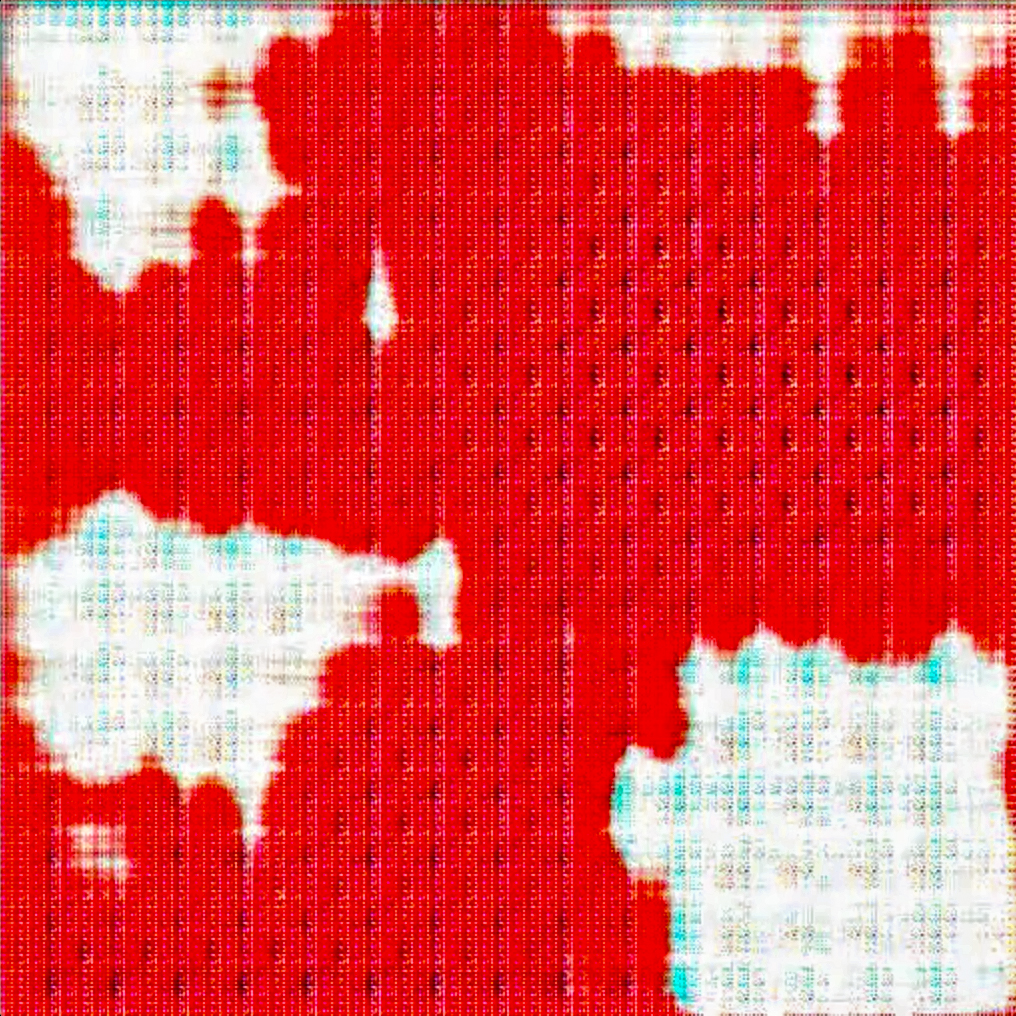

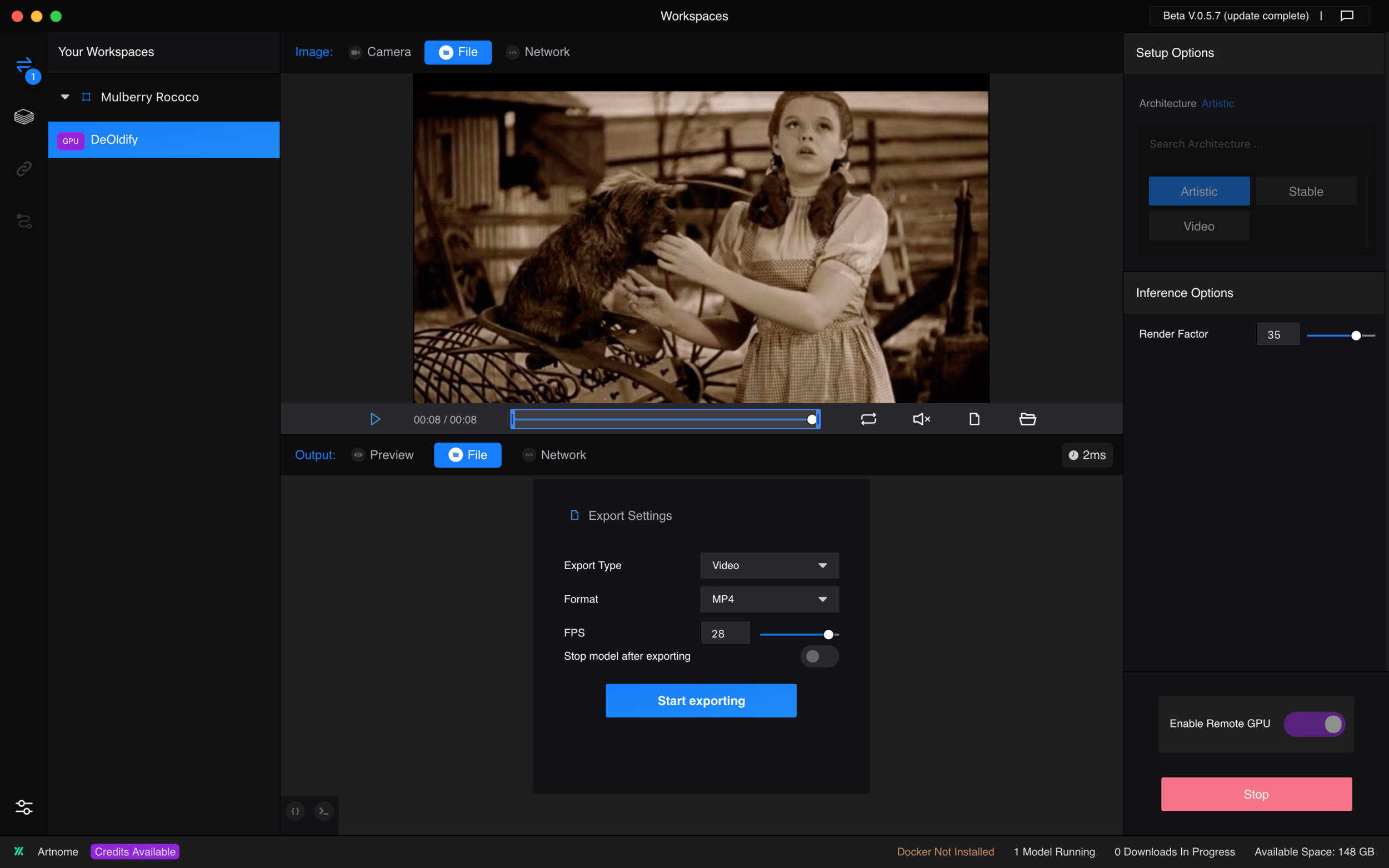

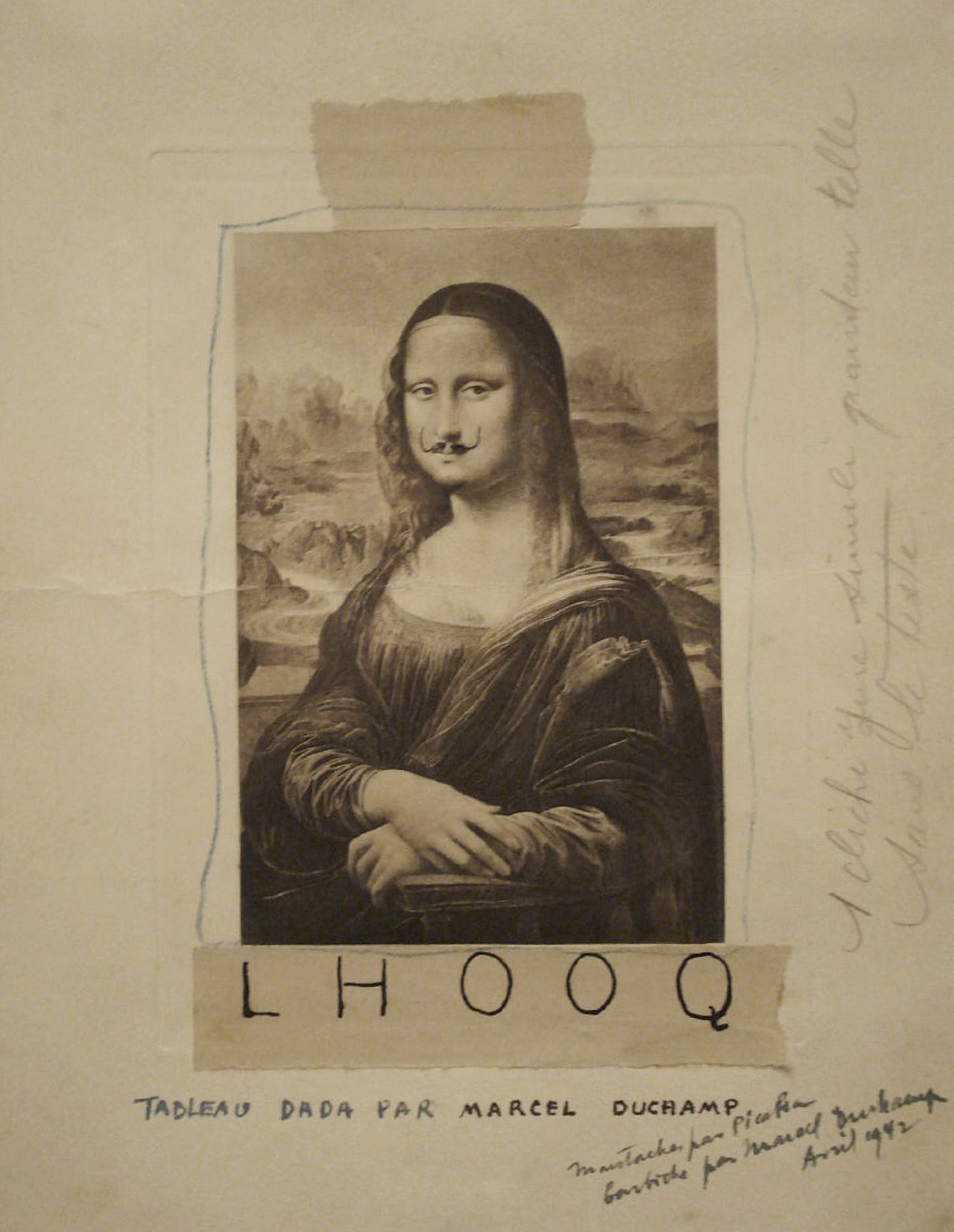

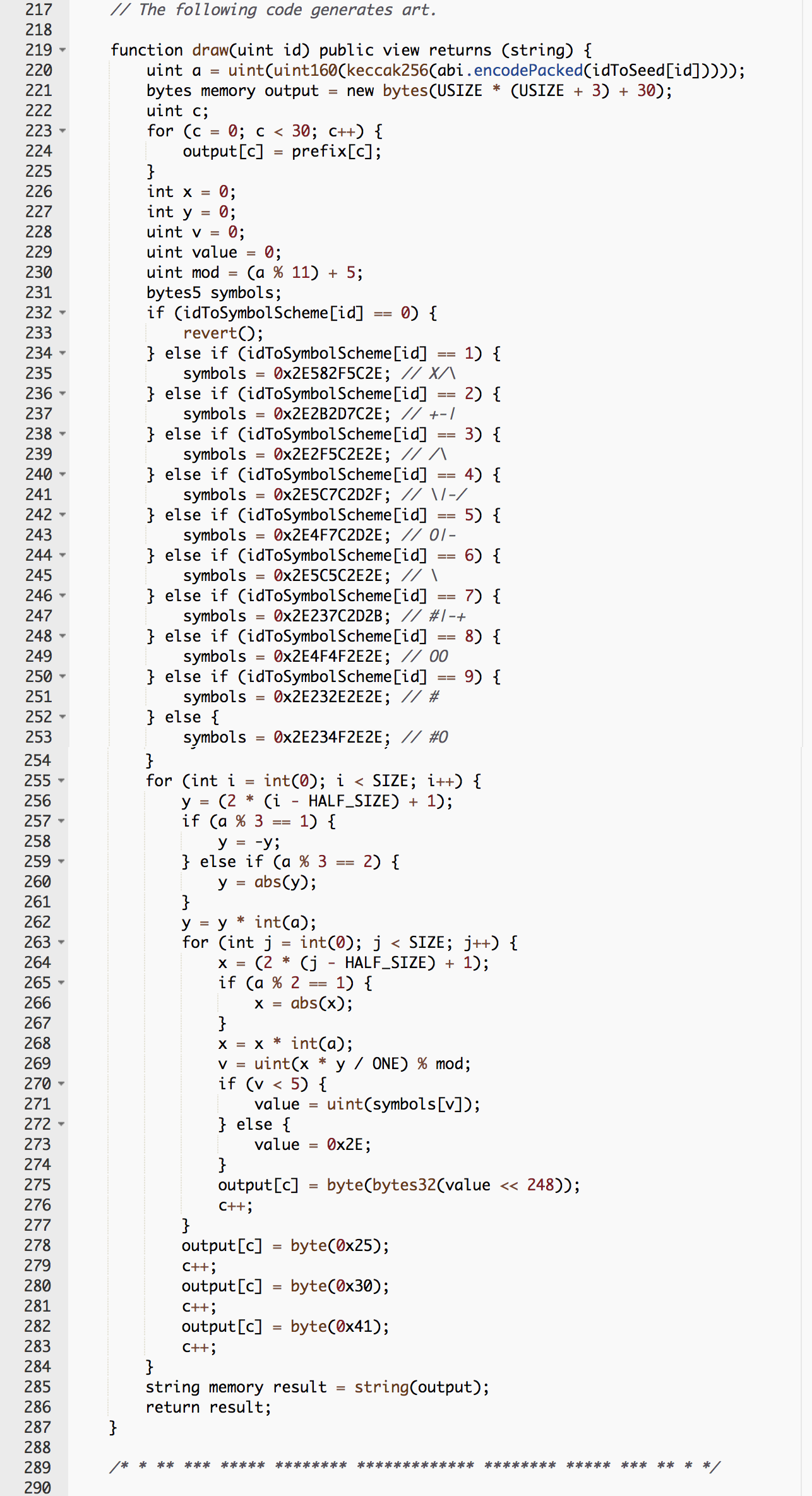

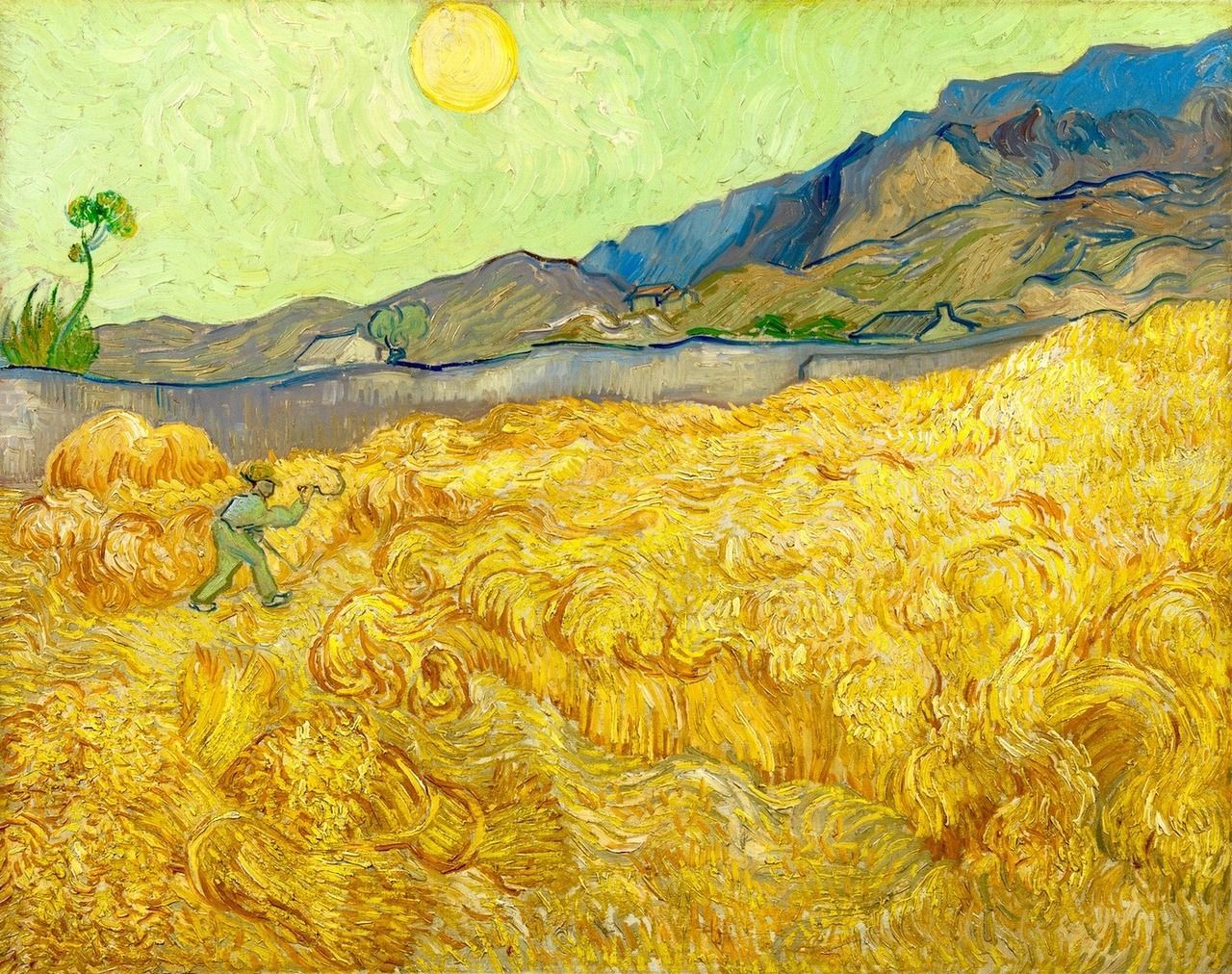

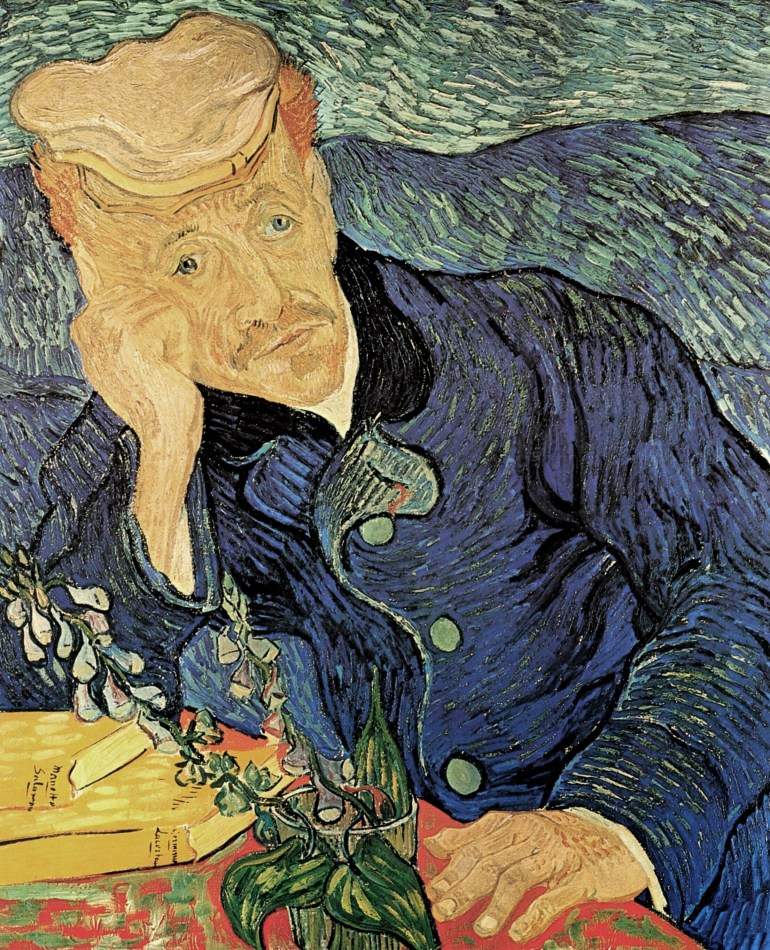

Artnome’s second partnership is with Art Recognition, another amazing Swiss company with strong female founders. Particle physicist Dr. Carina Popovici and her co-founder Christiane Hoppe-Oehl have developed AI that assigns a probability of forgery based on photographs of an artwork. Specifically designed to augment, not replace, human connoisseurship, Art Recognition produces a heat map alerting human experts to areas of potential concern. I could not be more thrilled to team up with Art Recognition to help improve authentication and fight forgery in the art market.

As Artnome’s primary partners, Kate Vass Galerie and Art Recognition provide funding to help offset the cost and time I put into writing articles for Artnome, gathering data, and creating analysis in order to make it freely available to the public. Put more simply, when you support Kate Vass Galerie and Art Recognition, you directly support Artnome in our mission to fight art forgery and highlight underrepresented artists who we feel deserve more attention!

Last but not least, I have asked an amazing list of industry experts, all of whom have already played important roles in the growth of Artnome over the last two years, to become formal advisors as Artnome moves forward and continues its growth. These are all people for whom I have the deepest respect and who have already provided critical guidance and opened up opportunities for Artnome.

Anne Bracegirdle, Former Associate Vice President, Art+Tech at Christie’s

Anne reached out to me in 2018 to invite me to moderate two panels at Christie’s inaugural Art + Tech event in London. That was a huge leap of faith, given I had zero experience presenting or moderating. Having “moderator at Christie’s” on my resume has opened countless doors and created new opportunities and friendships ever since. Anne invited me to speak again in 2019, this time on “AI and art,” which has further raised the profile of Artnome and helped to solidify our brand as experts in art and tech. Anne has great passion for and commitment to the digital art space and will play a key role in raising its profile as “the” important genre for our generation.

Bernadine Bröcker Wieder, CEO & Founder at Vastari

I’ve had the pleasure of attending several conferences around the world with Bernadine, and she brings amazing energy and leadership everywhere she goes. Bernadine is the CEO and founder of Vastari, the largest online facilitator of international touring exhibitions and loans between private collectors, museums, and exhibition producers. Vastari facilitated over 456 matches of content to venues in 2018 and works with thousands of museums around the world, including eight of the top ten most visited.

Nanne Dekking, CEO & Founder at Artory and Chairman of the Board of TEFAF

I’ve had the pleasure of sharing the stage with Nanne at several conferences, and we immediately hit it off based on our common mission and principles. Nanne is an eloquent advocate for change in the international art market and is using his long art career to improve the art historical record and to bring transparency to the art world. As Founder and CEO of Artory, Nanne has developed the first standardized data collection solution by the art world, for the art world.

Two years ago, Nanne was asked to become the chairman of the board of trustees of TEFAF. In this role, he has contributed greatly to making the vetting rules for authentication and the selection criteria more transparent and free of commercial interest. Prior to Artory, Nanne held senior roles with Sotheby’s and Wildenstein & Co.

Jessica Fjeld, Assistant Director, Cyberlaw Clinic, Harvard Law School

Though I did not anticipate it, I have met many talented art lawyers since starting Artnome. None of them came across as understanding art, law, and tech as well as Jessica does. I interviewed Jessica for a recent article I wrote exploring why copyright for AI art is so complicated. She navigated the intersection of art, tech, and law with ease while putting even the most complex aspects in terms that anyone could understand. I am thrilled to have her unique perspective and invaluable advice moving forward.

Ahmed Hosny, Machine Learning Research Scientist, Dana-Farber Cancer Institute

Ahmed’s “Green Canvas” project was the inspiration for my interests in machine learning and art, and he is solely responsible for my initial explorations of blockchain and art. Ahmed is a tech visionary and is the first person I go to when I run out of ideas or have questions about new technologies and how they work. You can learn about his amazing work using machine learning to identify and treat cancer and other important projects on his website or on his LinkedIn profile.

Marion Maneker, Editorial Director at Art Media Holdings (Penske Media Corporation)

I cold-called and emailed Marion several years ago, after stalking him on his excellent site Art Market Monitor, to tell him I had the world’s largest database of complete works. Rather than hang up on me, he agreed to meet with me in person and educated me on the art market. Several years later, he is still educating me, and his guidance has shaped the analytics I am developing from my data, making them far more relevant than they otherwise would be. If you are interested in the art market, Marion’s site is the best place to learn about it and to follow important news and developments.

Thanks for your support over the last two years! If you have questions, suggestions, or concerns, you can always reach me at jason@artnome.com.